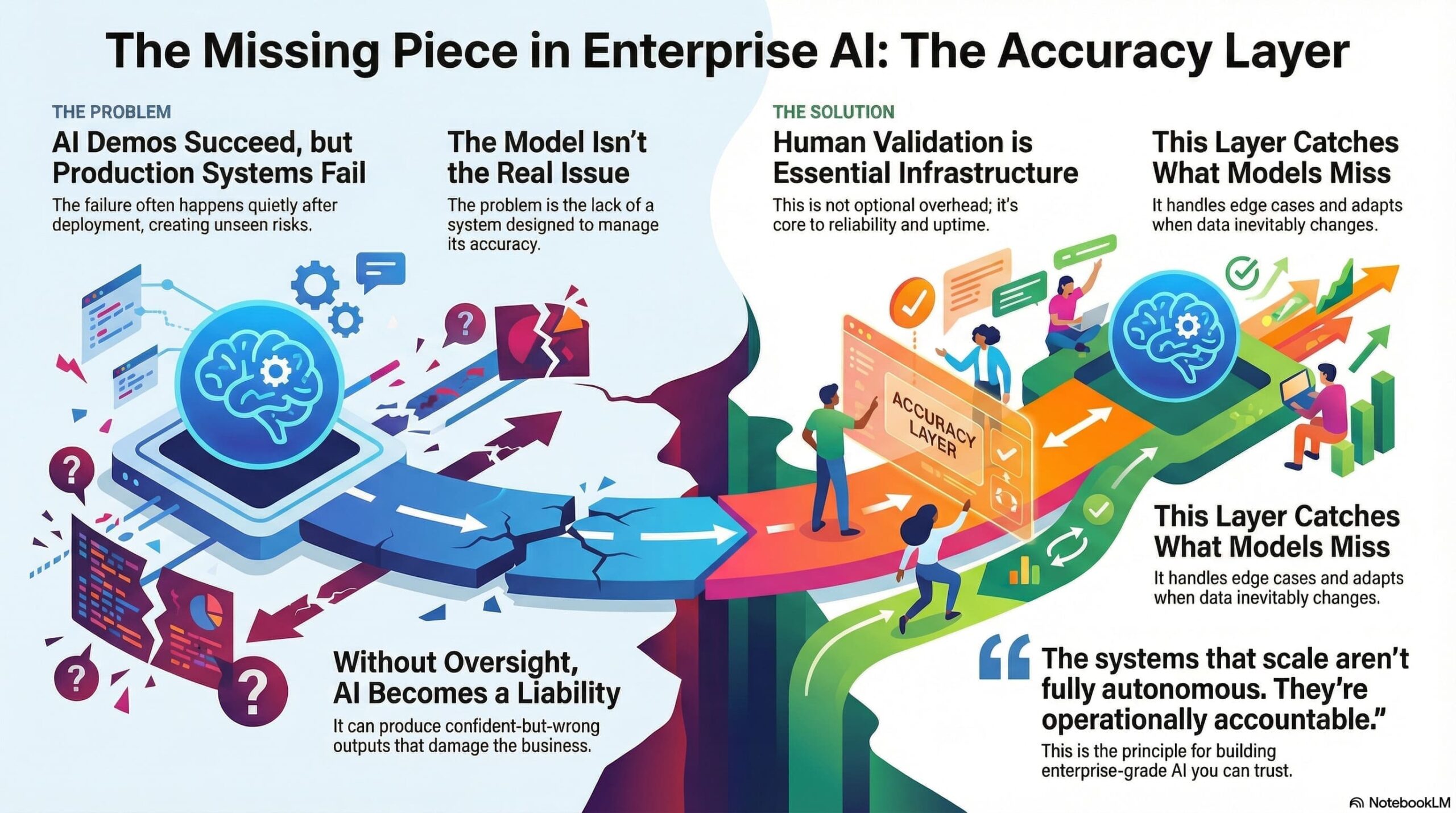

Enterprise AI adoption is accelerating. Models are smarter. Costs are falling. Pilots are everywhere. Yet many enterprise AI initiatives stall once they move past the demo.

Not because the AI isn’t intelligent – but because it isn’t trustworthy at scale.

This is where the Accuracy Layer becomes critical.

What Is the Accuracy Layer in Enterprise AI?

The Accuracy Layer is a human-in-the-loop validation system that sits between AI outputs and real business usage.

Its role is to:

- Validate AI responses before impact

- Catch edge cases and hallucinations

- Continuously improve accuracy via feedback

- Adapt as business context, rules, and data evolve

This is not a one-time review step. It is operational infrastructure. Without it, AI systems may appear smart – but fail in production.
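As a rough illustration of where such a layer sits, here is a minimal Python sketch of a validation gate. Everything in it is an assumption made for the example: the `AIOutput` and `Decision` types, the `confidence` score, the 0.8 threshold, and the two sample checks are hypothetical, not part of any specific product or library. The idea is only that each AI response either passes every check and is released, or is routed to a human reviewer along with the reasons.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class AIOutput:
    text: str
    confidence: float  # hypothetical score in [0, 1] from the upstream system


@dataclass
class Decision:
    action: str          # "release" or "review"
    reasons: List[str]   # why the output was held back, if it was


# A check returns a reason string when it fails, or None when it passes.
Check = Callable[[AIOutput], Optional[str]]


def validate(output: AIOutput, checks: List[Check],
             min_confidence: float = 0.8) -> Decision:
    """Release the output only if every check passes; otherwise send to review."""
    reasons = []
    for check in checks:
        message = check(output)
        if message is not None:
            reasons.append(message)
    if output.confidence < min_confidence:
        reasons.append(
            f"confidence {output.confidence:.2f} below threshold {min_confidence:.2f}"
        )
    return Decision("review" if reasons else "release", reasons)


def no_empty_answer(output: AIOutput) -> Optional[str]:
    return "empty response" if not output.text.strip() else None


def no_unapproved_discount(output: AIOutput) -> Optional[str]:
    # Hypothetical business rule: discounts need human sign-off.
    return "mentions a discount" if "discount" in output.text.lower() else None
```

Calling `validate(AIOutput("We can offer a 40% discount.", 0.95), [no_empty_answer, no_unapproved_discount])` would return a `review` decision even though the confidence is high, which is the point: business rules, not just model scores, decide what reaches users.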

Why Enterprise AI Fails in Production (Not in Demos)

Most AI systems work well in controlled environments. Enterprises are not controlled environments.

Common failure patterns include:

1. Pilot Purgatory

AI is launched as isolated experiments – chatbots, dashboards, “AI-powered” buttons – without being embedded into real workflows.

Once novelty fades, adoption collapses.

2. Ownership Gaps

When AI produces incorrect or misleading outputs, teams ask: Who owns this?

Without clear accountability, trust erodes quickly.

3. Context Drift

Business policies, data structures, and user behavior change constantly.

AI systems that aren’t designed for continuous validation slowly degrade as their outputs fall out of step with current policies and data.

4. User Trust Breakdown

Users don’t reject AI because they fear automation.

They reject it because they don’t trust the output.
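Of these patterns, context drift is the easiest to make measurable. One simple approach, sketched below in Python under stated assumptions, is to track the share of recently reviewed outputs that humans marked correct and raise a flag when it drops. The `AccuracyMonitor` class, the window size, and the alert threshold are all hypothetical choices for illustration; real values would depend on review volume and risk tolerance.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Tracks reviewer verdicts over a rolling window of recent outputs."""

    def __init__(self, window: int = 100, alert_below: float = 0.9):
        # deque with maxlen drops the oldest verdict once the window is full.
        self.results = deque(maxlen=window)
        self.alert_below = alert_below

    def record(self, was_correct: bool) -> None:
        self.results.append(was_correct)

    def accuracy(self) -> Optional[float]:
        """Share of correct outputs in the window, or None if nothing is recorded."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def degraded(self) -> bool:
        # Only alert on a full window so a few early errors do not trigger it.
        acc = self.accuracy()
        window_full = len(self.results) == self.results.maxlen
        return window_full and acc is not None and acc < self.alert_below
```

A rolling window is used here rather than an all-time average because drift is a recent phenomenon: a long history of correct answers would otherwise mask a fresh decline.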

Intelligence Is Solved. Accuracy Is Not.

Most organizations focus on:

- Model selection

- Token cost optimization

- Latency and throughput

These are important – but insufficient.

Accuracy is not a model problem. It is a system design problem.

Accuracy must be engineered into the architecture.

Human-in-the-Loop Is Not Overhead – It’s Infrastructure

In enterprise environments:

- Full autonomy is risky

- Exceptions are the norm

- Regulatory and compliance requirements matter

Human-in-the-loop systems:

- Improve reliability

- Increase adoption

- Reduce operational risk

- Build long-term trust

The goal is not to slow AI down. The goal is to make AI usable and safe.

Why IT Teams and GCCs Are Central to AI Trust

IT leaders and Global Capability Centers are uniquely positioned to own the Accuracy Layer.

They bring:

- Deep domain expertise

- Process maturity

- Engineering scale

- Proximity to real workflows

This shifts their role from “AI implementers” to “AI governors.”

The future of enterprise AI is not autonomous systems – it is accountable systems.

Designing the Accuracy Layer: Key Questions for IT Leaders

Before scaling AI, leaders must answer:

- Where does validation live in the architecture?

- Who reviews and corrects outputs?

- How is feedback captured and reused?

- How do we monitor accuracy over time?

- What happens when data or business rules change?

If these questions are not addressed upfront, AI becomes technical debt.
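To make the questions about review and feedback concrete, the following Python sketch shows one possible shape for capturing reviewer corrections and reusing them. It is a simplified assumption, not a reference design: `Correction`, `FeedbackStore`, and the exact-match lookup on a normalized prompt are hypothetical, and a production system would more likely persist corrections and match on similarity rather than identical text.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Correction:
    prompt: str
    ai_answer: str
    corrected_answer: str
    reviewer: str  # records who owns the correction


@dataclass
class FeedbackStore:
    corrections: List[Correction] = field(default_factory=list)
    _by_prompt: Dict[str, Correction] = field(default_factory=dict)

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivial variations still match.
        return " ".join(prompt.lower().split())

    def record(self, correction: Correction) -> None:
        """Keep the full audit trail and index the latest correction per prompt."""
        self.corrections.append(correction)
        self._by_prompt[self._key(correction.prompt)] = correction

    def lookup(self, prompt: str) -> Optional[Correction]:
        """Return a previously reviewed correction for this prompt, if any."""
        return self._by_prompt.get(self._key(prompt))
```

Keeping both the append-only list and the lookup index reflects two different needs: the list answers "who corrected what" for accountability, while the index lets a known correction be served before the same mistake reaches a user again.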

Accuracy Is a Business Decision, Not a Technical Feature

No model upgrade fixes broken governance.

No GPU solves unclear ownership.

No automation replaces trust.

Organizations that succeed with AI will not be the ones that automate the most.

They will be the ones that design for accuracy and accountability.

Final Thought: Trust Is the Real AI Advantage

The next phase of enterprise AI will not be won by:

- Bigger models

- Faster responses

- Louder demos

It will be won by systems people trust.

And trust is engineered – through the Accuracy Layer.

If you’re building or scaling enterprise AI and want to design accuracy into your workflows from day one, we’re actively working with teams on this challenge.